Fine-Tune GPT-4o Vision Models for Image Classification

Unlock Higher Accuracy in GPT-4o Vision Models with Fine-Tuning for Your Custom Data

Oct 7, 2024

OpenAI's GPT-4o series offers multimodal models capable of processing both text and images. While GPT-4o performs well across general vision tasks, its performance on domain-specific datasets sometimes falls short.

OpenAI has launched support for vision fine-tuning, which lets you improve the model's performance by fine-tuning it on your custom dataset.

At Cortal Insight, our mission is to accelerate machine learning experiments, helping data scientists save time on repetitive data tasks. One of the exciting developments we're exploring is the ability to fine-tune GPT-4o vision models for custom datasets that are specific to industries like manufacturing, retail, and robotics.

Why Fine-Tune GPT-4o for Vision Tasks?

GPT-4o models have proven powerful at handling multimodal tasks (text + images). However, for highly domain-specific data, such as detecting surface defects in manufacturing or monitoring quality control in retail, general-purpose models might not deliver optimal performance. Fine-tuning GPT-4o models to your specific visual dataset allows you to achieve higher accuracy for tasks like defect detection, visual inspections, and beyond.

Testing GPT-4o on Domain-Specific Data

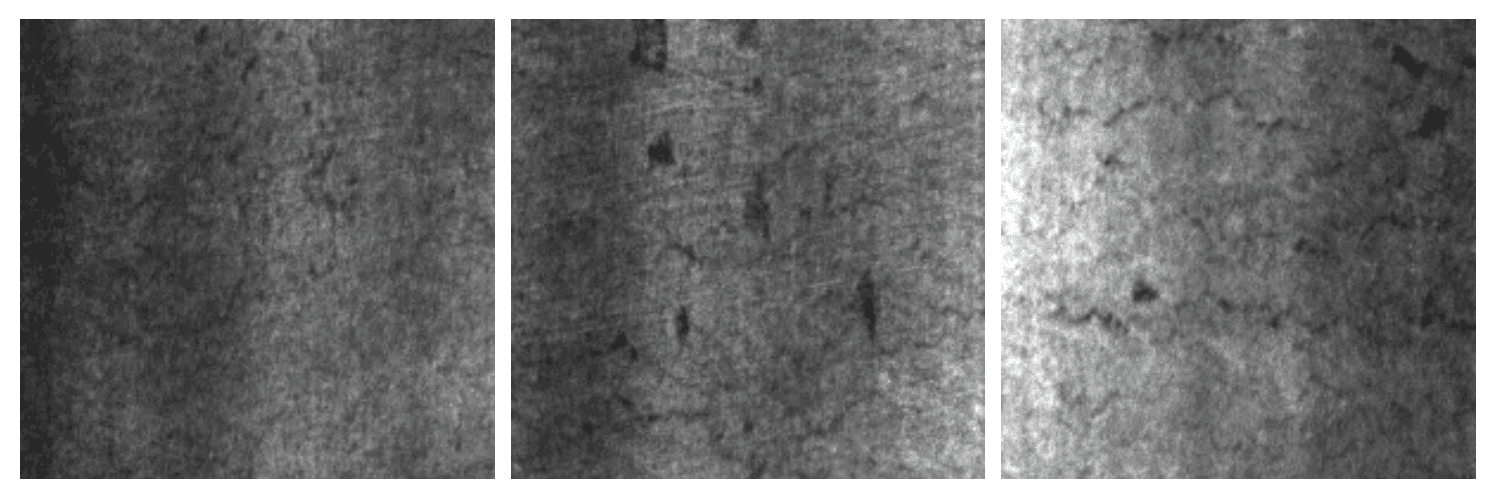

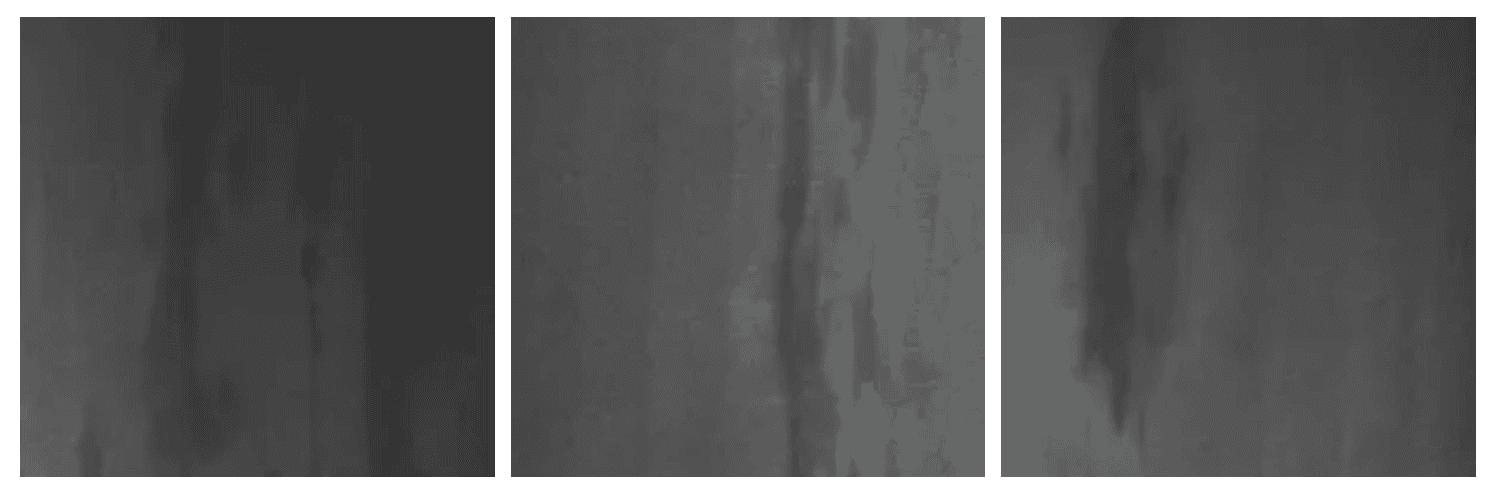

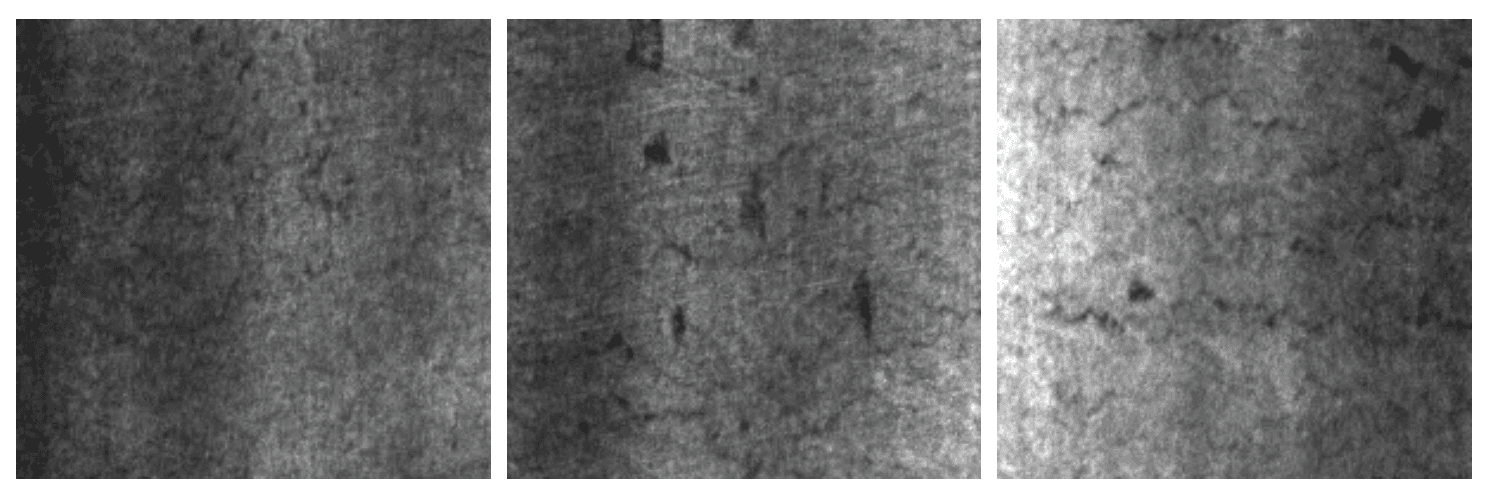

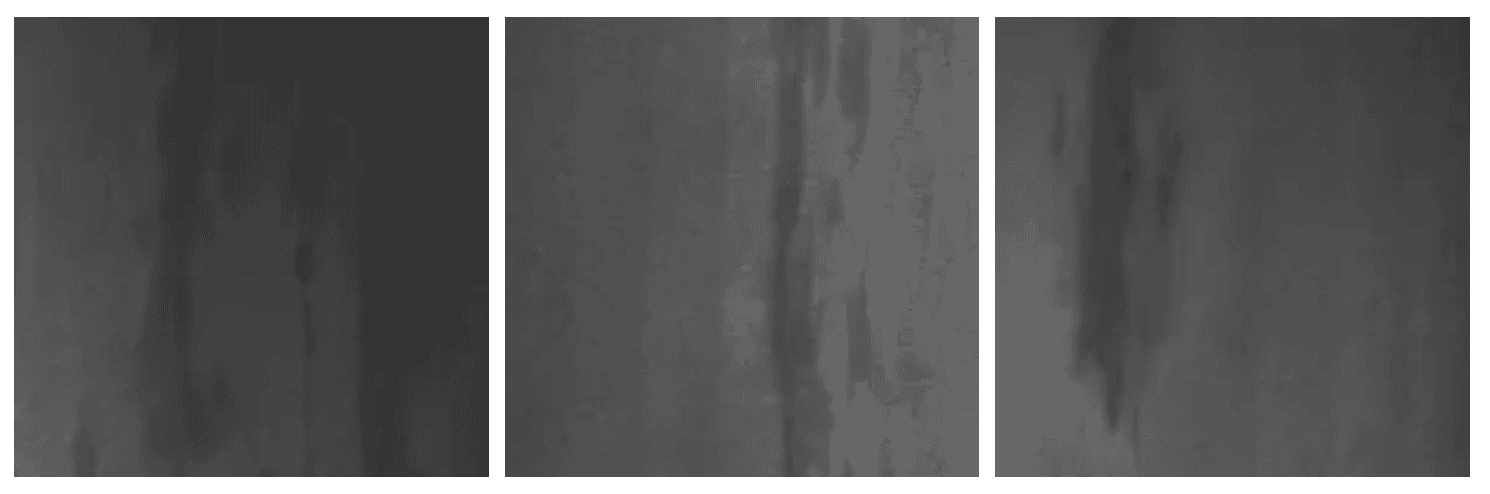

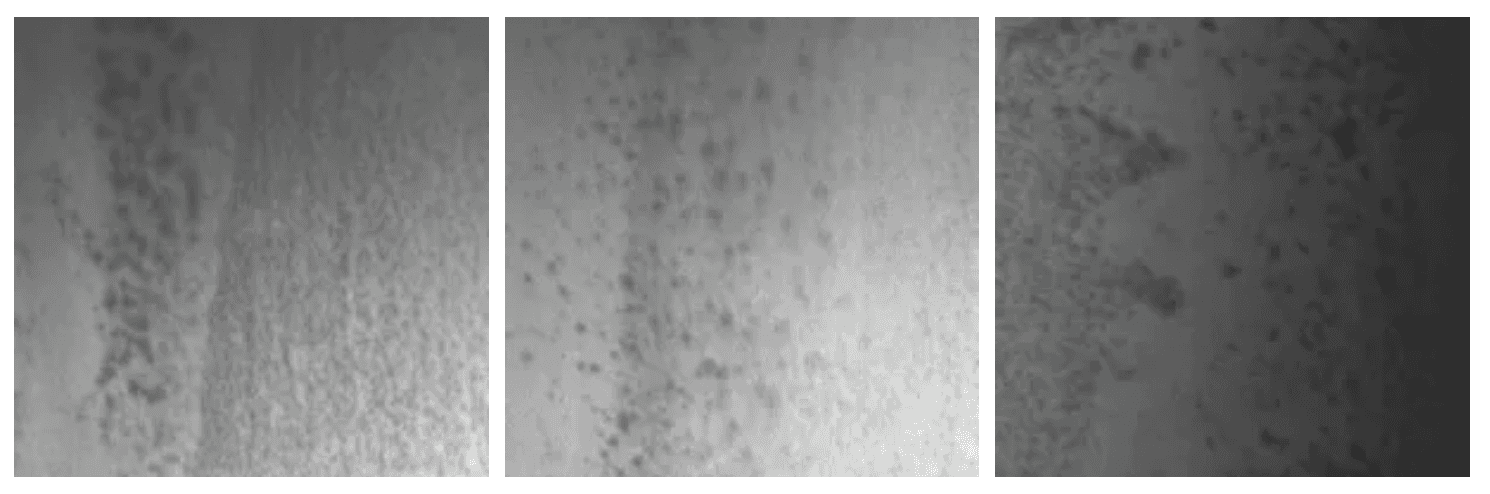

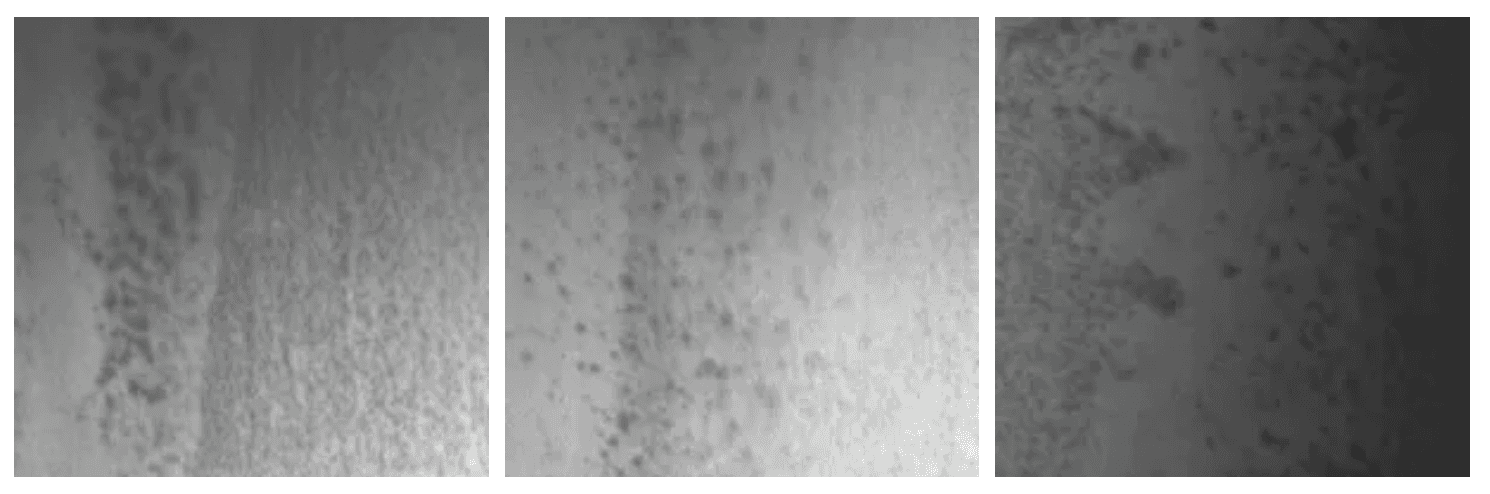

Before jumping into fine-tuning, it’s good practice to measure the model’s current performance. To begin, test out-of-the-box GPT-4o on a dataset of your choice. For example, on the publicly available NEU surface defect dataset, GPT-4o classifies pitted surface defects reliably but struggles with crazing and inclusion defects, as the sample results below show.

Test results on crazing defect type: a) Predicted: unclear b) Predicted: unclear c) Predicted: pitted_surface

Test results on inclusion defect type: a) Predicted: unclear b) Predicted: unclear c) Predicted: inclusion

Test results on pitted surface defect type: a) Predicted: pitted_surface b) Predicted: pitted_surface c) Predicted: pitted_surface

We are using a small subset of classes from this dataset to test. You can test GPT-4o’s classification abilities using a simple instruction prompt:

    classes = ['pitted_surface', 'inclusion', 'crazing', 'unclear']

    INSTRUCTION_PROMPT = (
        "You are an inspection assistant for a manufacturing plant. "
        "Analyze the provided image of steel surfaces and classify it based on the kind of defect. "
        f"There are four classes: {classes}. "
        "You must always return only one option from that list. "
        "If you are not sure, choose 'unclear'."
    )

Then, run your prediction using this prompt:

    # Both functions are methods of the tester class; self.client is assumed
    # to be an OpenAI client instance.
    def predict(self, instruction_prompt: str, base64_image: str) -> str:
        # Send the text prompt and the base64-encoded image in one user message.
        payload = [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": instruction_prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/jpeg;base64,{base64_image}"},
                    },
                ],
            }
        ]
        try:
            response = self.client.chat.completions.create(
                model="gpt-4o",
                messages=payload,
                max_tokens=300,
            )
            return response.choices[0].message.content
        except Exception as e:
            print(f"Error during API prediction: {e}")
            return "Prediction failed"

    def classify_single_image(self, image_path: str):
        instruction_prompt = (
            "You are an inspection assistant for a manufacturing plant. "
            "Analyze the provided image of steel surfaces and classify it based on the kind of defect. "
            f"There are four classes: {classes}. "
            "You must always return only one option from that list. "
            "If you are not sure, choose 'unclear'."
        )
        # Load and classify a single image
        image = self.load_image(image_path)
        if image:
            base64_img = self.image_to_base64(image)
            if base64_img:
                result = self.predict(instruction_prompt, base64_img)
                print(f"Classification result for {image_path}: {result}")
            else:
                print("Failed to convert image to base64.")
        else:
            print("Failed to load image.")

This testing gives you a baseline of the model's performance before fine-tuning.
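To turn spot checks like the ones listed earlier into a number you can compare after fine-tuning, a small per-class accuracy tally is enough. The sketch below is an illustration, not part of the original scripts: `normalize_prediction` and `per_class_accuracy` are hypothetical helper names, and the sample data simply re-enters the nine baseline predictions reported in this section.

```python
from collections import defaultdict

CLASSES = ["pitted_surface", "inclusion", "crazing", "unclear"]


def normalize_prediction(raw: str, classes=CLASSES) -> str:
    """Map a raw model reply onto one of the known classes (hypothetical helper)."""
    cleaned = raw.strip().strip("'\"").lower()
    # Anything outside the allowed list is treated as 'unclear'.
    return cleaned if cleaned in classes else "unclear"


def per_class_accuracy(results):
    """results: iterable of (true_label, raw_prediction) pairs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for true_label, raw in results:
        total[true_label] += 1
        if normalize_prediction(raw) == true_label:
            correct[true_label] += 1
    return {label: correct[label] / total[label] for label in total}


# The nine baseline predictions reported above, re-entered by hand.
baseline = [
    ("crazing", "unclear"), ("crazing", "unclear"), ("crazing", "pitted_surface"),
    ("inclusion", "unclear"), ("inclusion", "unclear"), ("inclusion", "inclusion"),
    ("pitted_surface", "pitted_surface"), ("pitted_surface", "pitted_surface"),
    ("pitted_surface", "pitted_surface"),
]

if __name__ == "__main__":
    for label, accuracy in per_class_accuracy(baseline).items():
        print(f"{label}: {accuracy:.2f}")
```

Three images per class is far too few for a reliable estimate, so treat the numbers as a sanity check and run the same tally on a larger held-out set when you can.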

Prepare the Dataset for Fine-Tuning

OpenAI's fine-tuning API requires images to be passed either as a URL or encoded as base64, with the following message structure:

For URL-based images:

    "messages": [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "What’s in this image?"},
                {"type": "image_url", "image_url": {"url": "https://example.com/image.jpg"}}
            ]
        }
    ]

For base64-encoded images:

    "messages": [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "What’s in this image?"},
                {"type": "image_url", "image_url": {"url": "data:image/jpeg;base64,..."}}
            ]
        }
    ]

To fine-tune GPT-4o, your image dataset must be structured in this manner. You can convert your custom data easily by first organizing your dataset as follows:

    root_directory/
    ├── class1/
    │   ├── image1.jpg
    │   ├── image2.png
    │   └── ...
    ├── class2/
    │   ├── image1.jpeg
    │   ├── image2.webp
    │   └── ...
    └── classN/
        ├── image1.jpg
        ├── image2.png
        └── ...

Note: Supported formats include .jpeg, .jpg, .png, and .webp. Ensure that all images are in RGB or RGBA mode, as required by GPT-4o. These checks are handled by the script.
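The folder layout above maps directly to (image, label) pairs: each subdirectory name is the class label. As a rough sketch of that mapping (not the code in classification_data_workflow.py, which is not shown in this post), the hypothetical `collect_labeled_images` below walks the root directory and keeps only files with the supported extensions. It does not check the RGB/RGBA color mode; that would need an imaging library.

```python
from pathlib import Path

SUPPORTED_EXTENSIONS = {".jpeg", ".jpg", ".png", ".webp"}


def collect_labeled_images(root_directory):
    """Return sorted (image_path, class_label) pairs, one per supported image."""
    pairs = []
    for class_dir in sorted(Path(root_directory).iterdir()):
        if not class_dir.is_dir():
            continue  # ignore stray files next to the class folders
        for image_path in sorted(class_dir.iterdir()):
            if image_path.is_file() and image_path.suffix.lower() in SUPPORTED_EXTENSIONS:
                pairs.append((image_path, class_dir.name))
    return pairs
```

Calling `collect_labeled_images("/path/to/images")` would then give you the list to encode, with labels taken from the folder names.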

Once your dataset is properly structured, it’s time to convert your images into the format required by GPT-4o. The classification_data_workflow.py script handles this for you. You’ll need to update the following fields:

    main_directory = "/path/to/images"  # Your dataset directory
    output_file = "prepared_dataset_classification.jsonl"  # JSONL file
    api_key = "{Insert API key here}"  # Your OpenAI API key

Then run the script:

    python3 classification_data_workflow.py

This script will:

- Process each image and convert it to base64 format.
- Create a JSONL file that matches the required structure.
- Enforce size and image constraints (e.g., a maximum of 10 images per example, with a total dataset size limit of 8 GB).
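To make the conversion step concrete, here is a minimal sketch of how one training record could be built and written as JSONL. It is an illustration under stated assumptions rather than the contents of classification_data_workflow.py: the helper names `build_example` and `write_jsonl` and the extension-to-MIME-type table are hypothetical, and the closing assistant message carrying the class label reflects the chat fine-tuning format, in which each example ends with the reply the model should learn to give.

```python
import base64
import json

# Assumed mapping from file extension to MIME type for the data URL.
MIME_TYPES = {".jpg": "image/jpeg", ".jpeg": "image/jpeg",
              ".png": "image/png", ".webp": "image/webp"}


def build_example(image_bytes: bytes, extension: str, label: str, prompt: str) -> dict:
    """Build one chat-format training example with a base64-encoded image."""
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    data_url = f"data:{MIME_TYPES[extension.lower()]};base64,{encoded}"
    return {
        "messages": [
            {"role": "user", "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": data_url}},
            ]},
            # The target the model should learn to produce for this image.
            {"role": "assistant", "content": label},
        ]
    }


def write_jsonl(examples, output_file: str) -> None:
    """Write one JSON object per line, as the JSONL format requires."""
    with open(output_file, "w", encoding="utf-8") as handle:
        for example in examples:
            handle.write(json.dumps(example) + "\n")
```

Using the same instruction prompt here as at inference time keeps the training examples consistent with how the fine-tuned model will later be queried.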

Uploading Your Dataset

Now that your dataset is ready, it’s time to upload it for fine-tuning. OpenAI’s API can handle both large and small datasets, and the finetune_uploader module makes this easy.

    def upload_dataset_node(api_key, file_path):
        uploaded_file_id = upload_dataset(api_key, file_path)
        if uploaded_file_id:
            print(f"Dataset uploaded successfully. File ID: {uploaded_file_id}")
            return uploaded_file_id
        else:
            print("File upload failed.")
            return None

The script will automatically:

- Select the appropriate upload method (single file or multipart upload) based on the size of your dataset.
- Upload the dataset for fine-tuning.
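The uploader's internals are not shown in this post, so the following is only a sketch of what such a step might look like with the official `openai` Python package. The 512 MB threshold and the helper names `choose_upload_method` and `upload_single_file` are assumptions for illustration; check the current API limits before relying on them. Only the single-file path is sketched; larger files would go through a multipart upload instead.

```python
import os

# Assumed cutoff above which a multipart upload is used instead of a single request.
SINGLE_UPLOAD_LIMIT_BYTES = 512 * 1024 * 1024


def choose_upload_method(size_bytes: int) -> str:
    """Return 'single' for small files and 'multipart' for larger ones."""
    return "single" if size_bytes <= SINGLE_UPLOAD_LIMIT_BYTES else "multipart"


def upload_single_file(api_key: str, file_path: str) -> str:
    """Upload a JSONL file for fine-tuning and return its file ID."""
    from openai import OpenAI  # imported lazily so the helper above has no dependency

    if choose_upload_method(os.path.getsize(file_path)) != "single":
        raise ValueError("File is too large for a single-request upload.")
    client = OpenAI(api_key=api_key)
    with open(file_path, "rb") as handle:
        uploaded = client.files.create(file=handle, purpose="fine-tune")
    return uploaded.id
```

Keeping the size decision in its own small function makes it easy to adjust the threshold or test it without touching the network.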

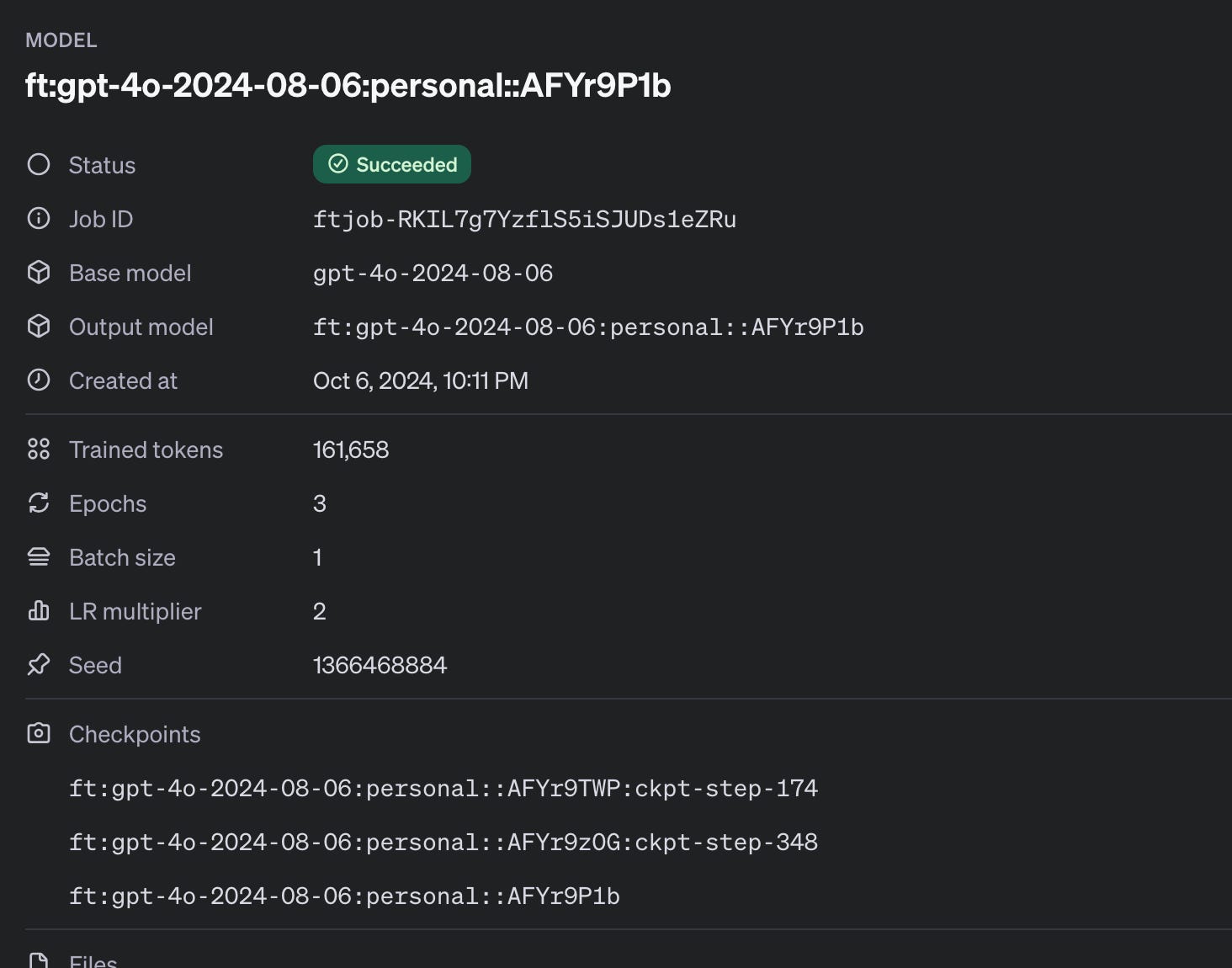

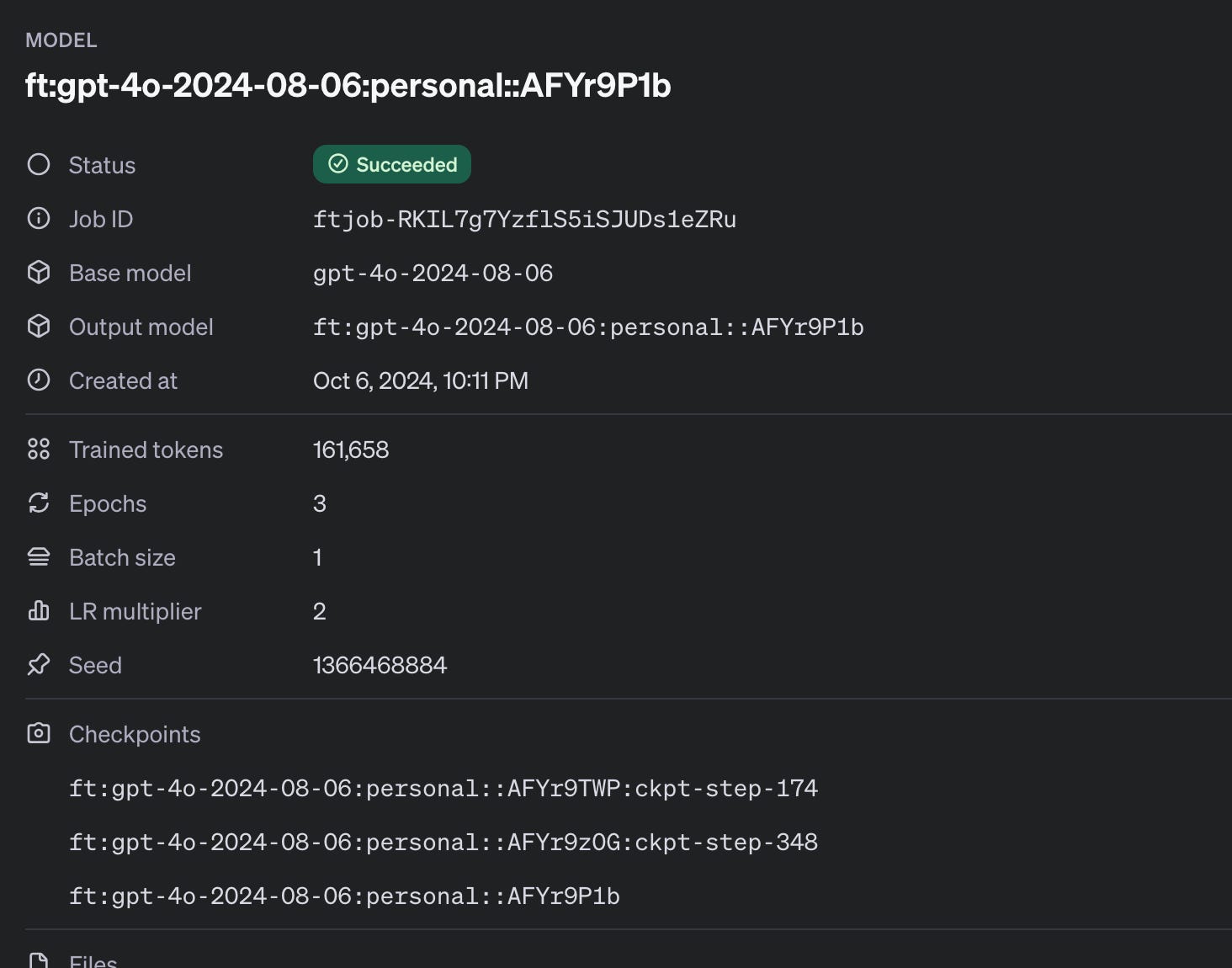

Fine-Tuning GPT-4o on Your Custom Dataset

Once the dataset is uploaded, you can submit the fine-tuning job. Simply call the start_fine_tuning_job function provided in the finetune_uploader.py script.

    def submit_finetuning_job(api_key, uploaded_file_id, model="gpt-4o-2024-08-06"):
        if uploaded_file_id:
            start_fine_tuning_job(api_key, uploaded_file_id, model)
        else:
            print("No uploaded file to fine-tune.")

Monitor the Fine-Tuning Process

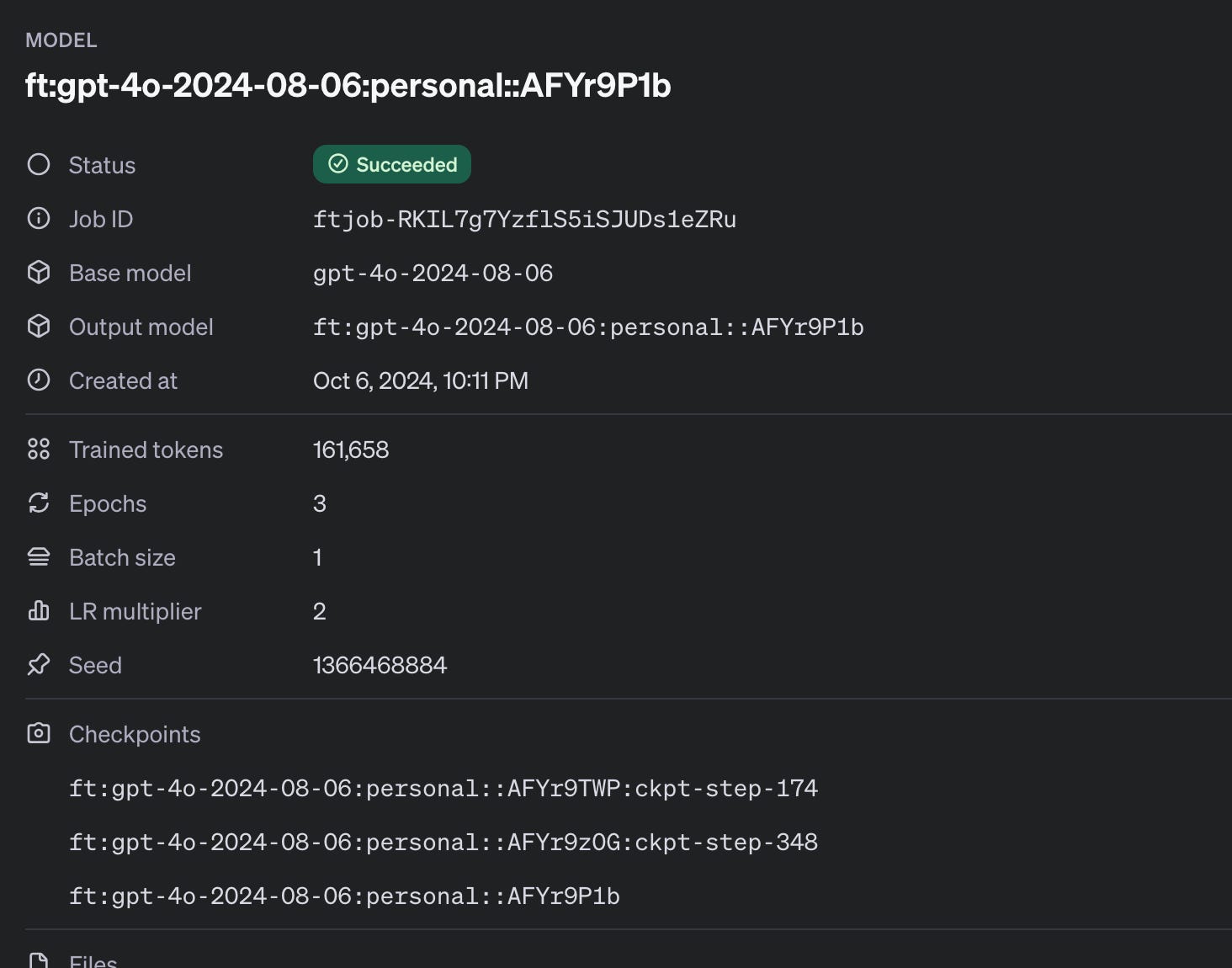

While GPT-4o is fine-tuning, you can monitor the progress through the OpenAI console or API. Once the fine-tuning is complete, you’ll have a customized GPT-4o model fine-tuned for your custom dataset to perform image classification tasks.
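If you prefer polling from code over watching the console, a sketch along these lines may help. It uses the `openai` Python package's fine-tuning job retrieval call; the helper names, the 60-second polling interval, and the set of terminal status strings are assumptions for illustration and are not taken from finetune_uploader.py.

```python
import time

# Statuses after which a job will not change any more (assumed set).
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def is_finished(status: str) -> bool:
    """True once the job has reached a final state."""
    return status in TERMINAL_STATUSES


def wait_for_job(api_key: str, job_id: str, poll_seconds: int = 60) -> str:
    """Poll a fine-tuning job until it finishes; return the fine-tuned model name."""
    from openai import OpenAI  # lazy import: only needed when actually polling

    client = OpenAI(api_key=api_key)
    while True:
        job = client.fine_tuning.jobs.retrieve(job_id)
        print(f"Job {job_id} status: {job.status}")
        if is_finished(job.status):
            break
        time.sleep(poll_seconds)
    if job.status != "succeeded":
        raise RuntimeError(f"Fine-tuning ended with status '{job.status}'.")
    return job.fine_tuned_model
```

The returned name is the `ft:...` identifier you pass as the model when testing the fine-tuned model.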

Please note that fine-tuning GPT-4o models, as well as using OpenAI's API for processing and testing, may incur significant costs depending on the size of your dataset and the duration of your fine-tuning jobs.

By following this guide, you’ll have your own custom GPT-4o model tailored to your specific vision tasks. You can explore the full code repository here.

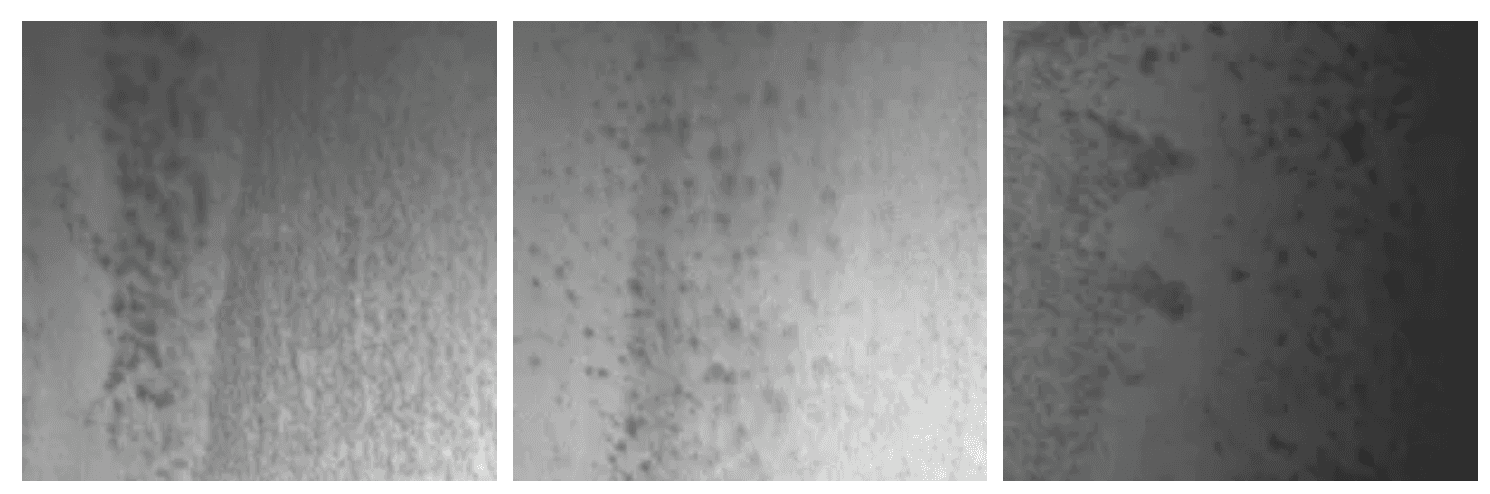

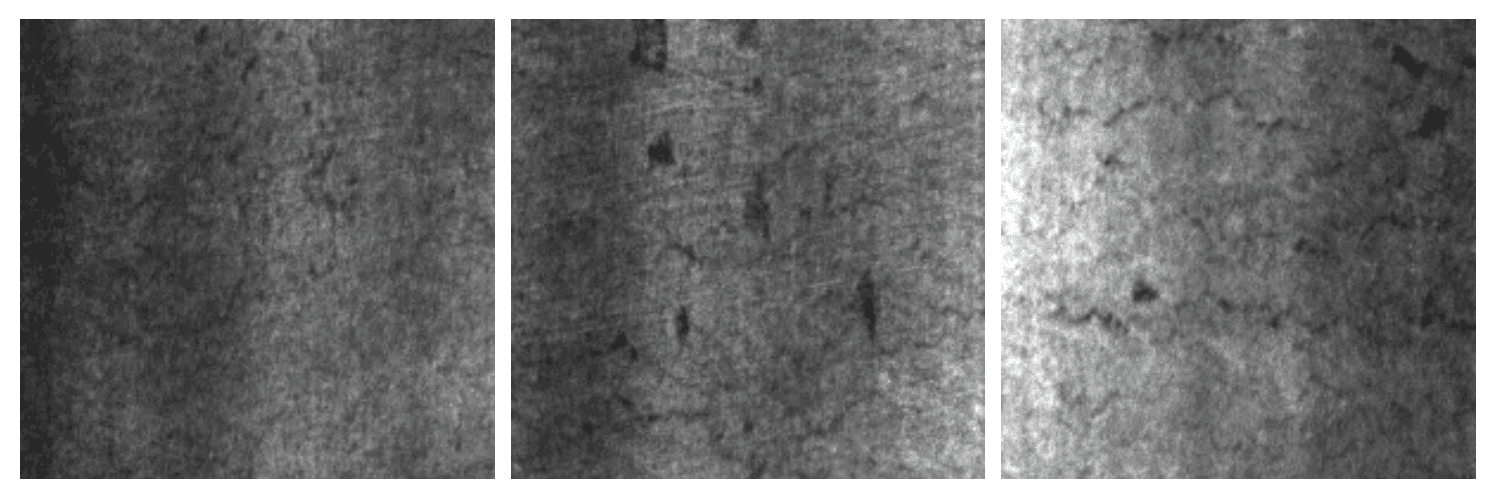

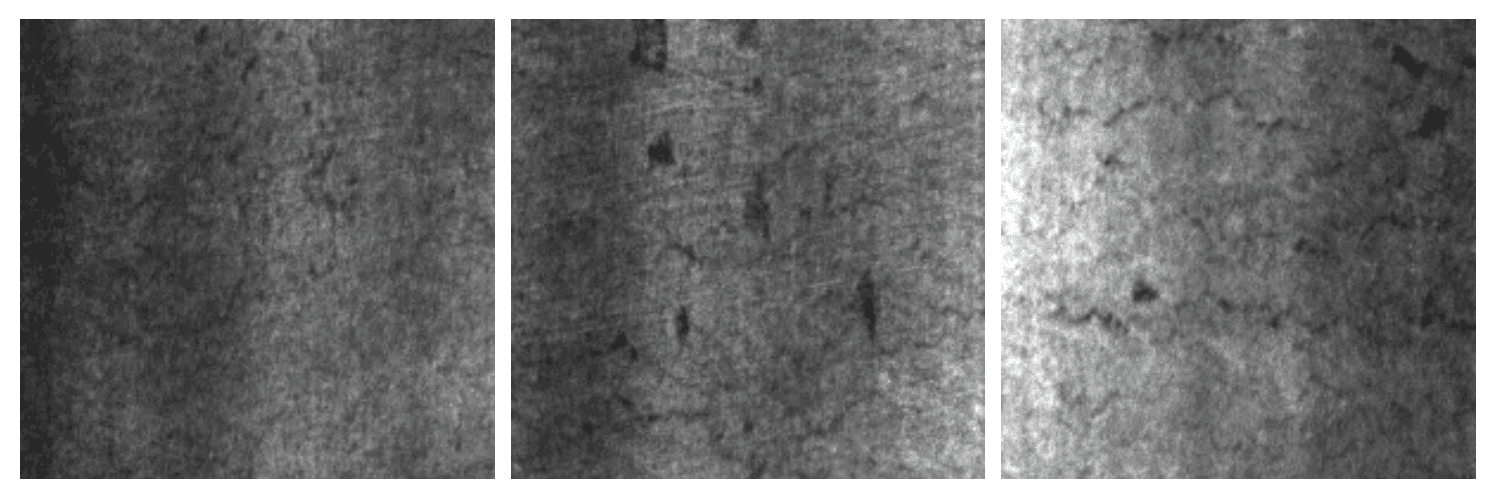

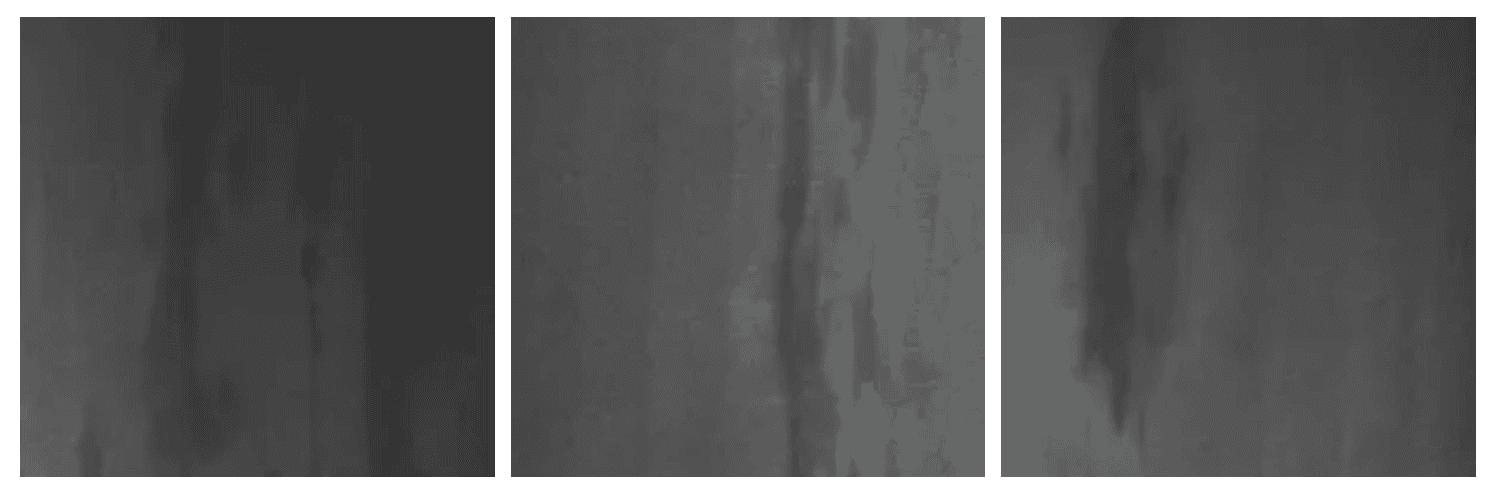

Testing the Fine-Tuned GPT-4o Model

Once your fine-tuning job is complete, you can test the newly trained model's classification performance on new images from your custom dataset using test_image_classification.py. Before testing, update the model variable to the name of your fine-tuned model (shown in the list of fine-tuning jobs).

model = 'ft:gpt-4o-2024-08-06:personal::AFYr9P1b'

Test results on crazing defect type: a) Predicted: crazing b) Predicted: crazing c) Predicted: crazing

Test results on inclusion defect type: a) Predicted: inclusion b) Predicted: inclusion c) Predicted: inclusion

Test results on pitted surface defect type: a) Predicted: pitted_surface b) Predicted: pitted_surface c) Predicted: pitted_surface

Conclusion

By fine-tuning GPT-4o on your custom dataset, you can significantly improve its performance for domain-specific tasks, such as visual inspection and defect detection in manufacturing. But it comes with its own set of challenges, especially when working with small datasets. By carefully structuring your dataset and leveraging OpenAI's fine-tuning workflows, you can optimize model performance and address issues like overfitting, class imbalance, and limited variation.
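One practical guard against overfitting on a small dataset is to hold out a validation set that preserves the class proportions, so that training and validation metrics can be compared. The sketch below is one hypothetical way to do that split in plain Python; the function name, the 20% validation fraction, and the fixed seed are illustrative choices, not part of the original workflow.

```python
import random
from collections import defaultdict


def stratified_split(labeled_items, validation_fraction=0.2, seed=0):
    """Split (item, label) pairs so each class appears in both sets when it can."""
    by_label = defaultdict(list)
    for item, label in labeled_items:
        by_label[label].append((item, label))

    rng = random.Random(seed)
    train, validation = [], []
    for label in sorted(by_label):
        group = by_label[label]
        rng.shuffle(group)
        # Hold out at least one example per class when the class has two or more.
        n_val = max(1, round(len(group) * validation_fraction)) if len(group) > 1 else 0
        validation.extend(group[:n_val])
        train.extend(group[n_val:])
    return train, validation
```

The validation examples can be written to a second JSONL file and supplied as the validation file when the fine-tuning job is created.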

Preetham Rajkumar
